If you have not yet read Blog 1 of Navigating the Conversational Analytics Maze, I highly recommend doing so, since it provides the context needed to understand most of the examples and concepts in this post.

Welcome back to our series on how to assess the wide variety of AI-powered conversational analytics offerings available today. Last week, we discussed the difficulty of identifying quality AI-powered solutions at a time when everyone is jumping on the generative AI bandwagon. Specifically, we looked at accuracy and why it falls short as a way to assess machine learning (ML) models. We saw how easily accuracy can be “cheated” on an unbalanced data set, and how a completely worthless model can still show an accuracy of 90%. Thankfully, data scientists are smart (especially those on the Marchex MIND+ and Analytics teams!) and have other ways of assessing the true utility of an ML model. So today, let’s learn more about what makes a great AI-powered conversational analytics product by looking at another metric: Precision.

Accuracy vs. Precision – What’s the Difference?

Let us recall what we discussed last week:

- We want to create a machine learning model that can identify frustrated customers by reading a call transcript.

- We are testing our model on a set of 100 calls that have been graded by human listeners.

- Of these 100 calls, 10 have frustrated customers.

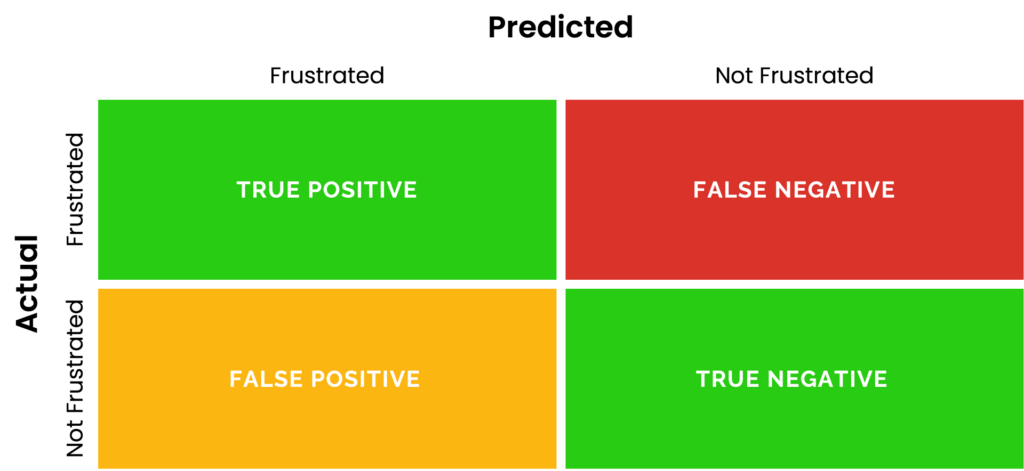

- We can compare the predicted labels from our model with the true labels in a grid aptly called a Confusion Matrix (see below).

- The equation for accuracy is:

Accuracy = Correct Guesses / Total Guesses
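To make the formula concrete, here is a minimal Python sketch that tallies the four cells of a confusion matrix from two lists of labels and computes accuracy from them. The label strings and function names are illustrative assumptions, not Marchex code, and the test set assumes 10 frustrated calls out of 100, the split implied by the 90% baseline from Blog 1.

```python
FRUSTRATED = "Frustrated"          # the "positive" label in this example
NOT_FRUSTRATED = "Not Frustrated"  # the "negative" label


def confusion_counts(y_true, y_pred, positive=FRUSTRATED):
    """Tally the four cells of a 2x2 confusion matrix."""
    if len(y_true) != len(y_pred):
        raise ValueError("label lists must have equal length")
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for truth, guess in zip(y_true, y_pred):
        if guess == positive:
            # A positive guess is either a True or a False Positive.
            counts["tp" if truth == positive else "fp"] += 1
        else:
            # A negative guess is either a False or a True Negative.
            counts["fn" if truth == positive else "tn"] += 1
    return counts


def accuracy(y_true, y_pred):
    """Accuracy = correct guesses / total guesses."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("need two non-empty label lists of equal length")
    correct = sum(truth == guess for truth, guess in zip(y_true, y_pred))
    return correct / len(y_true)


# Hypothetical test set: 100 human-graded calls, 10 of them frustrated.
y_true = [FRUSTRATED] * 10 + [NOT_FRUSTRATED] * 90
# A naive "model" that labels every call Not Frustrated.
naive_pred = [NOT_FRUSTRATED] * 100

print(confusion_counts(y_true, naive_pred))  # {'tp': 0, 'fp': 0, 'fn': 10, 'tn': 90}
print(accuracy(y_true, naive_pred))          # 0.9
```

The naive model never finds a single frustrated caller, yet every one of its 90 Not Frustrated guesses on the non-frustrated calls counts toward accuracy.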

When it comes to conversational analytics, most of the data businesses encounter is unbalanced, meaning some outcomes occur far more often than others. Marchex’s analysis of hundreds of thousands of business-to-customer interactions has shown that typically fewer than ten percent of customers express negative emotions on a call. This means we need a robust machine learning model capable of pinpointing frustrated callers.

Accuracy can be so misleading because it simply counts how many correct labels our model assigns, whether Frustrated or Not Frustrated. For example, a naive model that guesses Not Frustrated for every call would achieve 90% accuracy! But is it useful? Hardly. What if we instead measure the fraction of positive guesses that are correct? This fraction is called precision, and its formula is:

Precision = Correct Positive Guesses / Total Positive Guesses
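Here is a minimal Python sketch of that formula, computed from true and predicted labels. The label strings and the `precision` helper are illustrative assumptions. One detail the formula hides: a model that never guesses Frustrated has zero positive guesses, so its precision is 0 / 0 and undefined. This sketch returns `None` in that case; libraries make their own choice here (scikit-learn’s `precision_score`, for example, exposes a `zero_division` parameter for it).

```python
FRUSTRATED = "Frustrated"
NOT_FRUSTRATED = "Not Frustrated"


def precision(y_true, y_pred, positive=FRUSTRATED):
    """Precision = correct positive guesses / total positive guesses.

    Returns None when the model made no positive guesses, since 0 / 0
    is undefined. (Returning None is a choice made for this sketch.)
    """
    if len(y_true) != len(y_pred):
        raise ValueError("label lists must have equal length")
    # Keep the true label of every call the model flagged as positive.
    flagged = [truth for truth, guess in zip(y_true, y_pred) if guess == positive]
    if not flagged:
        return None
    correct = sum(truth == positive for truth in flagged)
    return correct / len(flagged)


# Toy example: three positive guesses, two of them correct.
toy_true = [FRUSTRATED, FRUSTRATED, NOT_FRUSTRATED, NOT_FRUSTRATED]
toy_pred = [FRUSTRATED, FRUSTRATED, FRUSTRATED, NOT_FRUSTRATED]
print(precision(toy_true, toy_pred))  # 0.6666666666666666

# The naive all-Not-Frustrated model never makes a positive guess.
y_true = [FRUSTRATED] * 10 + [NOT_FRUSTRATED] * 90
naive_pred = [NOT_FRUSTRATED] * 100
print(precision(y_true, naive_pred))  # None
```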

In our example, a “positive guess” is any time the model labels a conversation as Frustrated. So, when our model correctly labels a conversation as Frustrated, this is a True Positive. When the model incorrectly predicts Frustrated, this is a False Positive. Let’s look at our two metrics side by side.

- Accuracy is a measure of how many labels our model got right in general, i.e., how well it was able to label frustrated and non-frustrated customers alike.

- Precision shows us how often our model is right when it says a caller is frustrated. It answers the question: Of all the times we labeled a caller as frustrated, how many times were we right?

Let’s calculate the precision of a model whose results can be seen below.

We can see from the confusion matrix that the model labeled only two conversations as Frustrated. One of these labels was correct (a True Positive), while the other was not (a False Positive). Therefore, the model’s precision is:

Precision = 1 Correct Positive Guess / 2 Total Positive Guesses = 50%
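The same arithmetic can be done directly from the four confusion-matrix cells. In this Python sketch, the True Positive and False Positive counts come from the description above; the False Negative and True Negative counts (9 and 89) are an assumption that follows from a test set with 10 frustrated calls. The `ConfusionMatrix` class is a hypothetical helper, not part of any Marchex product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    tp: int  # frustrated callers labeled Frustrated
    fp: int  # non-frustrated callers labeled Frustrated
    fn: int  # frustrated callers labeled Not Frustrated
    tn: int  # non-frustrated callers labeled Not Frustrated

    @property
    def total(self) -> int:
        return self.tp + self.fp + self.fn + self.tn

    def accuracy(self) -> float:
        # Correct guesses / total guesses
        return (self.tp + self.tn) / self.total

    def precision(self) -> float:
        # Correct positive guesses / total positive guesses
        return self.tp / (self.tp + self.fp)


first_model = ConfusionMatrix(tp=1, fp=1, fn=9, tn=89)
print(first_model.precision())  # 0.5
print(first_model.accuracy())   # 0.9
```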

Oof. This model has an accuracy of 90% but a measly precision of 50%. Wild how far apart those two metrics can be, right? Fifty percent leaves a lot to be desired, so let’s try to improve our model.

Suppose we evaluate a refined model, and we get the following results:

Our new accuracy:

92 Correct Guesses / 100 Total Guesses = 92%

Our new precision:

2 Correct Positive Guesses / 2 Total Positive Guesses = 100%

Now we’re talking. It seems like we finally have a useful model. You might even think there is little left to tune. Honestly, if a model performs better than most humans in terms of accuracy and precision, is any further tuning necessary? I hate to be the bearer of bad news, but precision doesn’t take everything into account. Remember our motivation for building this model? We wanted to find all frustrated callers. While the model was correct every time it labeled a caller as frustrated, it caught only 2 of the 10 frustrated callers, meaning we had 8 false negatives.
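A quick way to see the blind spot is to compare the refined model with a hypothetical model that flags all 10 frustrated callers and nobody else. In this Python sketch, the refined model’s counts follow from the text (2 True Positives, 0 False Positives, 8 False Negatives, and 90 True Negatives, assuming 10 frustrated calls); the `finds_all` model is invented purely for comparison.

```python
def accuracy(tp, fp, fn, tn):
    """Correct guesses / total guesses."""
    return (tp + tn) / (tp + fp + fn + tn)


def precision(tp, fp):
    """Correct positive guesses / total positive guesses.

    Note that false negatives are not an input at all.
    """
    return tp / (tp + fp)


# Refined model from the text.
refined = dict(tp=2, fp=0, fn=8, tn=90)
# Hypothetical model that flags every frustrated caller and nobody else.
finds_all = dict(tp=10, fp=0, fn=0, tn=90)

for name, cm in [("refined", refined), ("finds_all", finds_all)]:
    print(name, accuracy(**cm), precision(cm["tp"], cm["fp"]), cm["fn"])
# refined 0.92 1.0 8
# finds_all 1.0 1.0 0
```

Both models score a perfect precision of 1.0, even though one misses 8 frustrated callers and the other misses none.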

Precision doesn’t account for false negatives, so it can’t tell us if we found all frustrated callers. The natural question to ask is: what is the best way to assess how well our model finds frustration in the first place? I’m glad you asked that, eager reader, and I hope you will join me next week for our last installment of Navigating the Conversational Analytics Maze, where I will introduce Recall, another essential metric used to evaluate ML models.