It has been a little over a year since ChatGPT was released, throwing everyone into a frenzy on how to incorporate the now commoditized technology that is generative AI (GAI) into their everyday workflows. One need not look further than the conversational analytics industry to see this trend in real time, where new GAI-powered products are being churned out left and right, even at a monthly rate by some proud embracers of the so-called revolutionary tool. While GAI undoubtedly has a lot to offer, it seems that businesses are so concerned with adopting the tech that they have overlooked how to ensure they are getting a quality solution that meets their needs. You can’t blame them, since up until now, AI in general has been a relatively niche technology that most business executives aren’t exactly experts on.

This begs the question: How should business leaders be assessing the numerous AI-powered products available in the market today? In this multi-part series, I will break down the relevant metrics so that you can confidently distinguish high-tier conversational analytics AI solutions from the subpar results of AI peer pressure. We’ll be starting our series with accuracy.

What is Accuracy?

To understand what differentiates a “quality” AI model from a lackluster one, we need to understand how these models are scored, and, more importantly, how these scores can be misleading. To do this, let’s use the example of a machine learning (ML) model that is tasked with reading call transcripts and flagging calls in which the callers are frustrated and/or dissatisfied with their received service. In other words, our model would analyze each customer interaction and label it as positive – the model detected frustration/dissatisfaction – or negative – the model detected no frustration/dissatisfaction. It is worthwhile to note that robust AI models will typically take many factors into account when assigning the positive/negative label to a call, such that while the number of data points that influence these decisions may be massive, the outputs of the model would be what we would call binary (true or false).

I would venture to say that most of us have an intuitive grasp of what accuracy is. Mathematically, accuracy can be represented by this equation:

Accuracy = Total Correct Guesses/Total Guesses

Let’s say we have 100 call transcripts which have all been read and graded by humans. The humans determined that, within this set of 100 calls, nine feature frustrated/dissatisfied customers. That means ninety-one callers should be labeled negative for the frustration/dissatisfaction metric, and only nine callers should be labeled positive. I say should because no AI model is perfect, and we can expect to have some error.

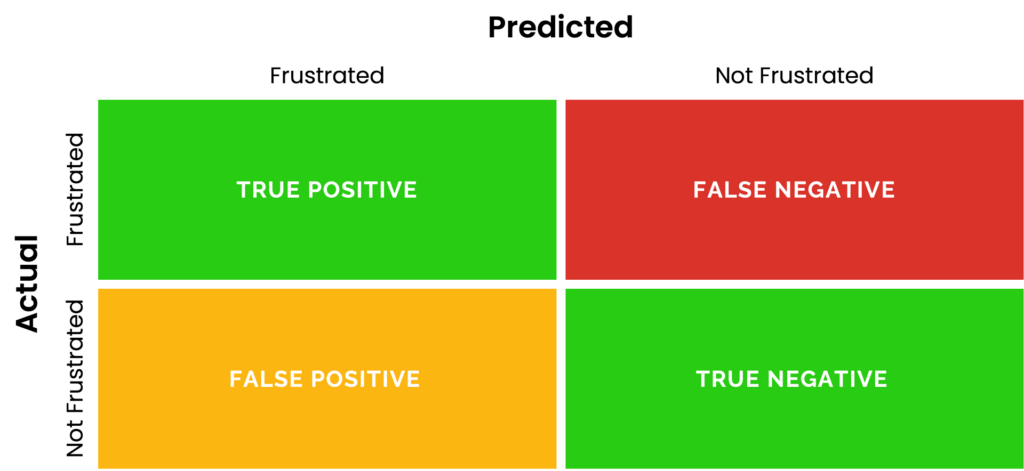

Because we already know how many frustrated callers the model should have found in our sample set, we can construct a grid that pits the true values of our data against the values predicted by our ML model.

If the above grid looks a bit confusing at first, that’s okay. It is, quite literally, called a Confusion Matrix. The left side of the matrix represents the labels attributed to calls by our example model, while the labels above the matrix represent what the calls should be labeled as. So, if a caller was frustrated, and our model successfully labels the caller as a frustrated customer, then the outcome is a True Positive. Likewise, if the caller was frustrated, but the model labeled them as not frustrated, then this is a False Negative. The same logic applies to True Negative and False Positive, respectively. For our example, anything green represents a caller that the AI model labeled correctly (frustrated or not).

Now, let’s say we ran our aforementioned 100 calls through our Frustration/Dissatisfaction model, and we got the following results.

Using the confusion matrix, we can calculate our model’s accuracy by dividing the sum of the positive labels in green by the sum of all the numbers in the matrix.

Accuracy=Total Correct Guesses/Total Guesses=91/100=91%

Wow! A whopping 91% accurate. For reference, human listeners are typically expected to perform at an average accuracy of 90% or better. Pretty great AI model, right?

What if we had a model that never labeled a caller as frustrated/dissatisfied, meaning that the model never made any positive labels of negative emotion. Intuitively, we know that this would make the model worthless, since it isn’t doing the very thing, we want it to but hang with me. Such a model’s accuracy would surely be much worse, no? Well, let’s see. Here we have our updated confusion matrix:

Solving for accuracy, we get:

Accuracy=90/100=90%

Wait. What? This model is absolute garbage and will never ever positively identify any callers as registering dissatisfaction/frustration. However, it is still 90% accurate. What’s the deal here?

The answer is that we are analyzing what is known as biased data. In this case, the bias lies in the fact that the overwhelming majority of our callers expressed no frustration/dissatisfaction, but the customers expressing these negative emotions are exactly the ones we are trying to find.

When it comes to customer interactions, Marchex has found that less than ten percent of customers will express negative emotions on a call. Trying to find all these discontented callers by hand is impractical. It would take ages for humans to sort through every customer interaction to find the ten in a hundred that contain frustrated callers. This makes AI the perfect tool for companies who need to identify where and why they are losing customers and revenue. However, it is now clear that accuracy is certainly not the only metric by which these specialized AI solutions should be evaluated. In fact, if companies purporting to offer advanced conversational analytics solutions are only touting their models’ accuracies, it should raise a red flag.

If this is the case, then what should we use to evaluate machine learning models built for conversational analytics? Join me next week when I answer just that, as we discuss the tools used to expose the nuances that accuracy fails to uncover.